07. Limits of Parallelism

In this lesson, you'll learn about the theoretical and practical limitations of writing parallel programs.

Theoretical Limits of Parallelism

Parallelism can enable your programs to do more work in less time, but you can't just keep adding more threads to a program and expect it to keep getting faster.

Your program can only benefit from parallelism to the extent that its tasks are parallelizable.

Amdahl's Law

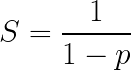

In 1967, a computer scientist named Gene Amdahl came up with an equation to represent this relationship, now known as Amdahl's Law:

$$S = \frac{1}{1 - p}$$

where:

- p is the fraction of the program that can be parallelized.

- S is the theoretical maximum speedup the program could gain from parallelism.
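To make the relationship concrete, here is a minimal Java sketch. It assumes the common general form of Amdahl's Law for a fixed number of threads n, S(n) = 1 / ((1 - p) + p / n), which approaches 1 / (1 - p) as n grows without limit. The symbol n and the class and method names (`AmdahlsLaw`, `speedup`, `maxSpeedup`) are hypothetical and chosen only for illustration.

```java
// Minimal sketch of Amdahl's Law (hypothetical class and method names).
public class AmdahlsLaw {

    /** Speedup with n threads when a fraction p of the work is parallelizable. */
    public static double speedup(double p, int n) {
        checkFraction(p);
        if (n < 1) {
            throw new IllegalArgumentException("n must be at least 1");
        }
        // The sequential part (1 - p) takes the same time no matter how many
        // threads there are; only the parallel part p is divided by n.
        return 1.0 / ((1.0 - p) + p / n);
    }

    /** Upper bound as n grows without limit: S = 1 / (1 - p). */
    public static double maxSpeedup(double p) {
        checkFraction(p);
        // For p == 1.0 this is 1.0 / 0.0, which Java evaluates to Infinity.
        return 1.0 / (1.0 - p);
    }

    private static void checkFraction(double p) {
        // Written this way so that NaN is rejected as well.
        if (!(p >= 0.0 && p <= 1.0)) {
            throw new IllegalArgumentException("p must be between 0 and 1");
        }
    }

    public static void main(String[] args) {
        double p = 0.9; // assume 90% of the program can run in parallel
        for (int n : new int[] {1, 2, 4, 8, 16, 1024}) {
            System.out.printf("n = %4d threads -> speedup %.2fx%n", n, speedup(p, n));
        }
        System.out.printf("limit as n grows -> %.2fx%n", maxSpeedup(p));
    }
}
```

With p = 0.9, the formula gives a 6.4x speedup at 16 threads, and no number of threads can push it past 10x: the 10% of the program that must run sequentially sets the ceiling.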

SOLUTION: 5 hours

Practical Limits of Parallelism

Threads can also be expensive to create and maintain:

- Each thread needs memory allocated for its own call stack.

- Threads require operating system support, so Java must make system calls to create new threads and register them with the operating system. System calls are slow compared with ordinary method calls.

- Java also internally tracks every thread you create, and this bookkeeping costs additional time and memory.
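These costs can be observed with a rough Java sketch that compares doing a trivial task many times on one thread against starting a brand-new thread for each task. This is an illustration under simple assumptions, not a rigorous benchmark: there is no JVM warm-up and only a single run, and the class and method names (`ThreadOverhead`, `runSequential`, `runOneThreadPerTask`) are hypothetical.

```java
import java.util.concurrent.atomic.AtomicInteger;

// Rough illustration of thread-creation overhead (hypothetical class name;
// not a rigorous benchmark: no warm-up, single run).
public class ThreadOverhead {

    /** Performs `tasks` trivial increments on the current thread; returns elapsed nanoseconds. */
    public static long runSequential(int tasks, AtomicInteger counter) {
        long start = System.nanoTime();
        for (int i = 0; i < tasks; i++) {
            counter.incrementAndGet();
        }
        return System.nanoTime() - start;
    }

    /** Starts one new thread per trivial increment, waits for all of them, returns elapsed nanoseconds. */
    public static long runOneThreadPerTask(int tasks, AtomicInteger counter) {
        long start = System.nanoTime();
        Thread[] threads = new Thread[tasks];
        for (int i = 0; i < tasks; i++) {
            // Each platform thread gets its own call stack, and start() asks the
            // operating system (via a system call) to create and schedule it.
            threads[i] = new Thread(() -> counter.incrementAndGet());
            threads[i].start();
        }
        try {
            for (Thread t : threads) {
                t.join(); // wait until this thread has finished its increment
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt(); // preserve the interrupt status
            throw new IllegalStateException("Interrupted while waiting for threads", e);
        }
        return System.nanoTime() - start;
    }

    public static void main(String[] args) {
        int tasks = 1_000;
        AtomicInteger sequentialCount = new AtomicInteger();
        AtomicInteger threadedCount = new AtomicInteger();

        long sequentialNanos = runSequential(tasks, sequentialCount);
        long threadedNanos = runOneThreadPerTask(tasks, threadedCount);

        System.out.printf("sequential loop:     %,d ns (count = %d)%n", sequentialNanos, sequentialCount.get());
        System.out.printf("one thread per task: %,d ns (count = %d)%n", threadedNanos, threadedCount.get());
    }
}
```

Because each task is only a single counter increment, the one-thread-per-task version typically takes far longer than the sequential loop; the exact numbers depend on the machine, operating system, and JVM.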